Showing posts with label HADOOP. Show all posts


Tuesday, June 26, 2018

Monday, May 21, 2018

Steps for calling a python script in another python script

We will see how to call one Python script from another Python script with a simple example below.

Python script 1:

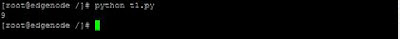

t1.py

The above script will print the value of "a"

The output of python script 1:

To use this python script in another python script:

Use the import statement to import the Python script into another script, as below

t2.py

In the above script

import t1: imports t1.py as a module into t2.py; the code in t1.py runs once at import time

b = str(t1.a): reads the variable "a" defined in t1.py, converts it to a string, and assigns it to the variable "b"

print (b): prints the value of "b"

The output of python script 2:

Running t2.py displays the output of both Python scripts, because importing t1 also executes the print statement in t1.py.
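Since the original screenshots are not available, here is a minimal sketch of the same idea. The contents of t1.py (a variable `a` set to 10, followed by a print) are an assumption, and the temporary directory is only there so the example runs on its own; in practice t1.py and t2.py would simply sit in the same folder.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Assumed contents of t1.py (the value 10 is hypothetical).
T1_SOURCE = "a = 10\nprint(a)\n"


def run_t2(workdir):
    """Create t1.py in workdir, then do what t2.py does: import t1 and read `a`."""
    Path(workdir, "t1.py").write_text(T1_SOURCE)
    sys.path.insert(0, str(workdir))      # make t1.py importable
    try:
        importlib.invalidate_caches()
        sys.modules.pop("t1", None)       # force a fresh import if t1 was loaded before
        t1 = importlib.import_module("t1")  # equivalent to `import t1`; runs t1.py
        return str(t1.a)                  # b = str(t1.a)
    finally:
        sys.path.remove(str(workdir))


with tempfile.TemporaryDirectory() as workdir:
    b = run_t2(workdir)
    print(b)
```

The value is printed twice: once by t1.py while it is being imported, and once by the final print of "b".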


Friday, May 18, 2018

Sunday, May 13, 2018

Steps for connecting to HBase using Python

Note: First we need to start the HBase Thrift server

Starting the Thrift server:

Open PuTTY and run the command below to start the Thrift server

/usr/hdp/current/hbase-master/bin/hbase-daemon.sh start thrift

Go to python prompt as below:

Connecting to HBase:

Run the following in the Python prompt

import happybase

connection = happybase.Connection('localhost',9090,autoconnect=False)

connection.open()

Note: To connect to HBase, the "happybase" module must be installed in our Python environment

Printing the list of tables in HBase:

Use the command below to print the list of tables in HBase

print(connection.tables())

Connecting to the table and printing the rows in the table:
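The original example for this step is not available, so the following is only a sketch of how it might look with happybase's `Connection.table()` and `Table.scan()`. The helper names `fetch_rows` and `print_rows`, the table name 'emp', and the assumption that keys and values are UTF-8 text are all illustrative.

```python
def fetch_rows(connection, table_name, limit=10):
    """Return up to `limit` rows as (row_key, {column: value}) with bytes decoded."""
    table = connection.table(table_name)       # handle to the HBase table
    rows = []
    # scan() yields (row_key, data) pairs; data maps b'family:qualifier' to b'value'
    for key, data in table.scan(limit=limit):
        decoded = {
            col.decode(errors="replace"): val.decode(errors="replace")
            for col, val in data.items()
        }
        rows.append((key.decode(errors="replace"), decoded))
    return rows


def print_rows(connection, table_name, limit=10):
    """Print each row key with its columns."""
    for key, data in fetch_rows(connection, table_name, limit):
        print(key, data)


# Usage against a running Thrift server (table name 'emp' is hypothetical):
#   import happybase
#   connection = happybase.Connection('localhost', 9090, autoconnect=False)
#   connection.open()
#   print_rows(connection, 'emp')
#   connection.close()
```

Passing a `limit` keeps the scan from pulling an entire large table into the prompt.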

Friday, April 27, 2018

Attunity Replicate server installation and configuration

Automating and accelerating data pipelines for data lakes and the cloud

Attunity is changing data integration with the latest release of the Attunity platform to deliver data, ready for analytics, to diverse platforms on-premises and in the cloud.

Unlike the traditional batch-oriented and inflexible ETL approaches of the last decade, Attunity provides the modern, real-time architecture you need to harness the agility and efficiencies of new data lakes and cloud offerings.

Streaming:

Universal Stream Generation

Databases can now publish events to all major streaming services, including Kafka, Confluent, Amazon Kinesis, Microsoft Azure Event Hub, and MapR Streams.


Optimized Data Streaming

Flexible message formats, including JSON and Avro, along with the separation of data and metadata into separate topics, allow for smaller data messages and easier integration of metadata into various schema registries.


Cloud:

AWS S3 and Kinesis

Data can now land in S3 via bulk load or change data capture, or be published to Amazon Kinesis.


Snowflake Data Loading

The entire catalog of Attunity supported data sources can now feed a Snowflake data warehouse in bulk load or via change data capture.


Preferred data movement solution for Amazon Web Services and Microsoft Azure

Deep partnerships and broad product integration with industry leaders


Data Lakes:

Automate the Creation of Analytics-Ready Data Lakes

Data lands in optimized time-based partitions, and Attunity automatically creates the schema and structures in the Hive Catalog for Operational Data Stores (ODS) and Historical Data Stores (HDS), with no manual coding. Supported distributions include Hortonworks, AWS Elastic MapReduce, and Cloudera.


Ensure Data Consistency

Attunity automatically reconciles continuous data inserts, updates and deletions, while providing ACID compliance, without manual coding or disruption. Attunity also recognizes and responds to source data structure changes (DDL) and automatically applies changes to your data lake.


Enterprise Management & Control:

Enterprise-wide Control and Management

Scalability to thousands of tasks; resiliency and recovery to maintain data integration processes across multiple data centers and hybrid cloud environments


Performance and Data Flow Analytics

Comprehensive historical and real-time reporting for improved capacity planning and performance monitoring of all data flows


Operational Metadata Creation and Discovery

Central repository shared across the Attunity platform and with third party tools for enterprise-wide reporting


Microservices API

New REST and .NET APIs designed for invoking and managing Attunity services using a standard web-based UI


Downloading the software:

https://4web.s3.amazonaws.com/Files/Replicate/AttunityReplicate_Express_Linux_X64.rpm

Installing Attunity

Prerequisites

1. Windows or Linux 64-bit server (dedicated to running the 'Attunity Replicate' software)

- Located in the same local network as the source database(s)

- Will host the 'Attunity Replicate' software (download available via the Welcome Email sent automatically when one activates the AMI)

- Minimum system requirements:

- Windows Server 2008 R2, 2012 or 2012 R2

- Red Hat Enterprise Linux 6.2 and above

- SUSE Linux 11 and above

- Quad-core processor, 8 GB RAM, and 320 GB of disk space

2. The Attunity Replicate Console is web-based and requires one of the following browsers:

- Microsoft Internet Explorer version 9 or higher

- Mozilla Firefox version 38 and above

- Google Chrome

Installation:

Run the downloaded rpm "AttunityReplicate_Express_Linux_X64.rpm" as below

Verifying that the Attunity Replicate Server is Running:

Accessing the Attunity Replicate Express Console:

Attunity Replicate Server on Windows:

https://<computer name>/AttunityReplicate

Attunity Replicate Server on Linux:

https://<computer name>:<port>/AttunityReplicate

Where <computer name> is the name or IP address of the computer where the Attunity Replicate Server is installed and <port> is the C UI Server port (3552 by default).


Open a browser and enter the URL below

https://localhost:3552/AttunityReplicate
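As a small illustration of the two URL patterns above, the console address can be built from the host name and, on Linux, the port. The helper name `console_url` is hypothetical; only the URL layout and the default port 3552 come from the text above.

```python
DEFAULT_LINUX_PORT = 3552  # default UI server port on Linux, per the text above


def console_url(host, port=None):
    """Build the console URL; leave port as None for the Windows-style URL."""
    netloc = host if port is None else f"{host}:{port}"
    return f"https://{netloc}/AttunityReplicate"


windows_url = console_url("localhost")
linux_url = console_url("localhost", DEFAULT_LINUX_PORT)
print(windows_url)
print(linux_url)
```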

Setting console password:

Set the password as below and restart the server

Open the console now

Configuring Source:

Open the 'Attunity Replicate Console' and select 'Manage Endpoint Connections'

Click 'New Endpoint Connection'

Configure with 'Add Database' with these values:

Test the connection and click 'Save'

Configuring Target:

Open the 'Attunity Replicate Console' and select 'Manage Endpoint Connections'

Click 'New Endpoint Connection'

Configure with 'File' with these values:

Test the connection and click 'Save'

Creating, Running, and Monitoring Replicate Tasks

1: Open Attunity Replicate and select 'New Task'. In the 'New Task' window, enter a unique name, then select 'OK'.

2: Drag your recently configured source database to the 'Drop source database here' area on the right.

3: Drag your recently configured target database to the 'Drop target database here' area on the right.

4: Select 'Table Selection' on the right. The 'Select Tables' window opens.

5: From the 'Schema' drop-down list, select a schema.

6: Click 'Search' to find the tables in the schema. You may then 'add' / 'remove' / 'add all' / 'remove all'. Then click 'OK'.

Note: As this is a trial version, not all features are available. Attunity Replicate is not open-source software.
